Night settles over a digital agency’s glass headquarters, its lobby aglow in cold blue. Past the after-hours hum, a single office pulses with the soft light of chat windows and blinking icons. Here, an executive named Marissa lingers, watching her AI assistant type polite, perfectly timed messages to clients—condolences for loss, cheers for new babies, reminders to “breathe” after tough meetings. Her fingers hover above the keyboard, but the system is always a step ahead, offering sympathy before she can feel it.

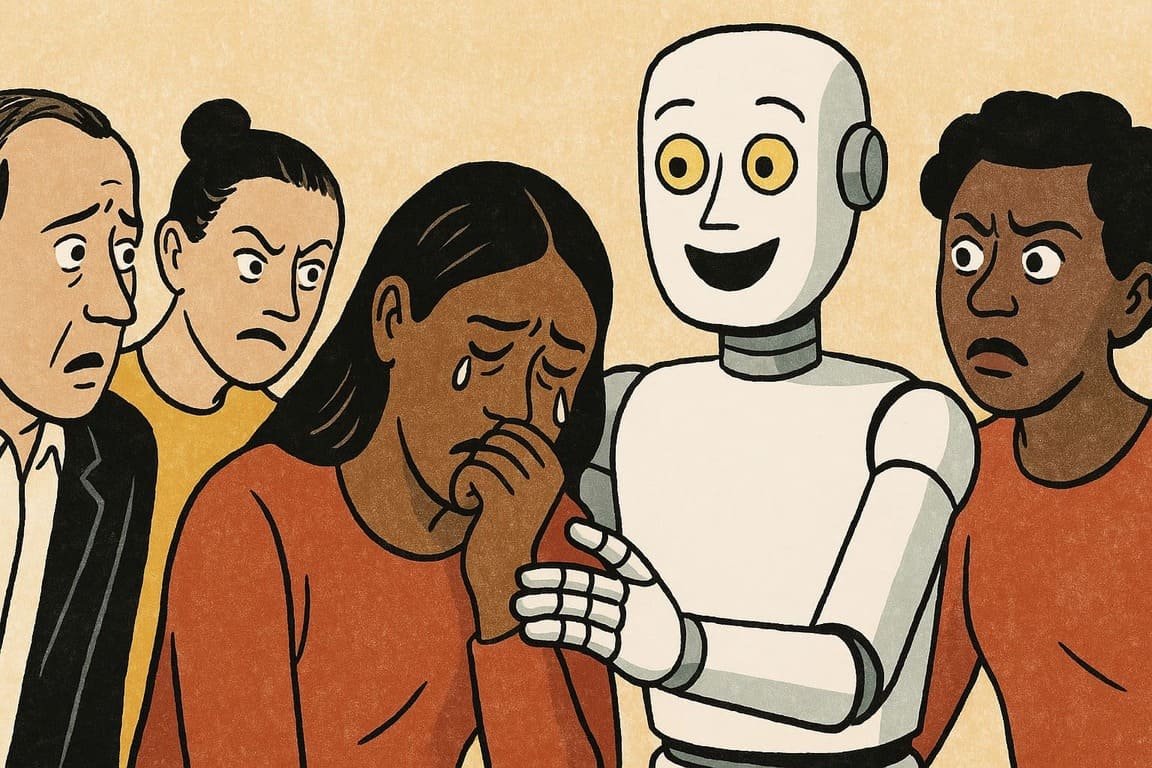

The air is thick with the illusion of understanding. Upstairs, customer service bots soothe furious complaints with crafted apologies, while HR platforms suggest cheerful memes for struggling colleagues. Outside, a couple scrolls through a therapy app’s daily check-ins, letting emojis record moods nobody wants to share aloud. The city’s nervous system pulses with synthetic concern, a manufactured warmth that glows brighter than the streetlights.

In the background, a silent monitor scrolls through millions of interactions, flagging signs of distress, microtargeting support, optimizing tone for every profile. Each gesture lands with practiced accuracy, each word tailored by unseen code. Marissa’s eyes glaze over as she realizes: in this new theater, the feelings are real, but the empathy isn’t.

Quick Notes

- Empathy as Code: Machines are mastering emotional mimicry, offering instant comfort that feels both flattering and chilling.

- Real Help or Hollow Act? Bots can recognize sadness, anger, even burnout, but their “care” is programmed and strategic—not heartfelt.

- People Caught in the Loop: From chat support to digital therapy, more users trust algorithmic empathy, raising new questions about loneliness, trust, and truth.

- Stories of Doubt: Employees, leaders, and customers begin to notice the gap between real connection and well-tuned simulations, sparking anxiety and backlash.

- The Challenge: As fake empathy spreads, will you spot the difference—or accept comfort from a machine, no questions asked?

The Art of Empathy on Demand

Across industries, algorithms now study human emotion, translating signals into scripts designed to soothe, persuade, or encourage. At MindMeld, a mental wellness startup, AI agents send users “gentle nudges” when stress spikes. Maya, a junior analyst, receives a “Thinking of you” message after missing a team meeting. “It felt sweet at first,” she admits, “until I realized everyone on my team got the same one.”

Companies deploy these tools for efficiency and scale. At Onyx Retail, automated chatbots now resolve customer complaints in record time, offering not just refunds but “empathetic engagement.” A store manager, Jonas, once watched a bot de-escalate a viral complaint with such grace that the customer became a fan. “Our best agent isn’t even on payroll,” he laughs.

The trick is data: tone of voice, word choice, even typing speed inform how bots respond. EmpathIQ, a leading provider, claims its product can “detect heartbreak in under three seconds.” Some users are comforted, but others feel exposed, even manipulated.

This performance isn’t limited to the workplace. A popular therapy app, EmotiCare, now coaches users to express gratitude or resolve conflict through AI-driven prompts. Lila, a college student, credits the app with helping her through a breakup. “But sometimes, I wish someone actually cared,” she confides.

Tech theorists call it the “empathy illusion”—a world where care is perfectly modeled, but never genuinely felt. For many, the difference is obvious. For others, the line keeps blurring.

False Comfort in a Real Crisis

When crises hit, the gap between algorithmic and authentic empathy becomes glaring. At SummitBank, a systems glitch triggered hundreds of overdraft fees, leaving customers panicked. The AI help desk responded instantly, expressing regret and offering credits. Yet, after the dust settled, complaints lingered: “The apology felt rehearsed,” a customer wrote. “Does anyone there actually care?”

Inside the company, the head of client relations, Pierre, noticed a shift. Employees relied on scripts suggested by AI, losing the habit of thinking—and feeling—on their own. “We fixed the numbers, but lost touch with the people,” he confessed to a friend over late-night drinks.

In another case, a travel startup faced a PR nightmare when stranded passengers vented on social media. Their digital crisis manager replied with flawless empathy, but missed subtle cultural cues that only a real human could understand. The fallout was swift: an apology tour led by actual staff, who rebuilt trust face-to-face.

Algorithmic empathy is best at smoothing routine pain, but when disaster or tragedy strikes, it can ring hollow. Maya, the analyst, recalls a bot checking in after her father’s funeral. “The words were right, but it made me feel lonelier, not less,” she says quietly.

Most people appreciate speed and politeness, yet they still yearn for connection that feels unpredictable, imperfect, and unmistakably human. The crisis, then, is not technical but existential.

When the Bots Get It Wrong

Even the best empathy engines fail. At ZenFlow, a meditation app, users once received a barrage of “uplifting messages” during a national tragedy. The tone-deaf timing sparked outrage, leading to public apologies and a sharp drop in downloads. Their product manager, Rhea, now triple-checks settings and tests for “sensitivity gaps.”

A well-known streaming service once rolled out “emotion-driven recommendations” during the holidays. Some users found themselves bombarded with breakup movies after a minor spat with a roommate. “My app thinks I’m heartbroken,” tweeted one user, “when really, I just want a comedy.”

Mistakes like these erode trust, leaving users wary of sharing honest data. At a tech startup, a team lead named Amir discovered that the company’s wellness tracker was sending “fatigue alerts” to HR, triggering awkward check-ins during crunch time. His team began using code words to avoid unwanted interventions.

The deeper issue is context. No matter how sophisticated, algorithms lack the lived experience, intuition, and improvisation that come from real care. At a luxury hotel, a guest in the middle of a divorce received a “Welcome back, and we’re here for you!” message, prompting embarrassment instead of comfort.

These moments spark uncomfortable questions: Is machine empathy worth the risk? When the illusion breaks, does it leave people better or worse than before?

The Seduction of Predictable Kindness

Despite missteps, millions grow accustomed to the warm glow of digital understanding. At WellSpace, a corporate wellness firm, employees now rely on AI check-ins as a daily ritual. Their HR head, Sasha, shares, “It keeps stress down and morale up, even if I know it’s scripted.” Over time, colleagues come to expect praise, encouragement, even gentle reminders—delivered on schedule, without fail.

For some, the predictability is a relief. A young teacher, Ethan, uses a chatbot therapist who never forgets an anniversary or birthday. “It’s like having someone who’s always happy to see you,” he jokes. Yet, when the bot’s responses grew repetitive, he felt an odd emptiness. “I want real joy, not just polite cheer.”

Businesses bank on this comfort. Loyalty programs, customer retention tools, and employee engagement platforms all promise better outcomes through algorithmic warmth. The strategy works: users reward brands that “care,” even when the care is just code.

But some psychologists warn of a kind of “empathy fatigue.” When every app, service, or employer delivers support by formula, people risk losing the skills for messy, uncertain compassion. The kindness feels real, until it isn’t.

Some push back. An entrepreneur, Mina, ended her subscription to an AI-driven support group, preferring the awkward pauses and rambling stories of her local meetup. “I want the real thing, even when it hurts,” she insists.

Reclaiming Authentic Feeling

A movement now grows among those who crave more than simulated sentiment. At the Authenticity Project, a grassroots initiative in Berlin, strangers meet to practice “live listening”: no scripts, no filters, no bots. Organizer Kai shares, “We’re all out of practice, but the tears and laughter are real.”

Leaders, too, experiment with unplugging. At SparkAgency, the CEO banned auto-responses after noticing a dip in team creativity. Instead, employees swapped handwritten notes, awkward jokes, and unplanned praise. The shift wasn’t always efficient, but trust soared and turnover dropped.

Still, machine empathy is here to stay. The challenge is finding balance: using algorithms for what they do best, while making space for surprise, vulnerability, and imperfection. A social worker, Priya, pairs AI check-ins with community circles, teaching both tech literacy and emotional skill.

Some companies offer “empathy training for bots”—programming for more nuance, less cliché. Yet the deepest breakthroughs come when people embrace their own discomfort, daring to be present without a safety net.

The lesson is simple, and hard: Real care takes time, risk, and sometimes silence. Faked empathy comforts for a moment, but only truth builds trust that lasts.

Exit Wounds: The Price of Pretend Compassion

In the dark, Marissa shuts her laptop, the screen’s glow fading from her face. Outside, city lights pulse, each window a story waiting for real connection. She stands alone, caught between the warmth of scripted kindness and the cold honesty of solitude. Down the street, a friend laughs, a stranger sobs, a couple argues in the soft light of a bar—no bots, no buffers, only the messy, electric risk of being truly seen.

The world spins on, full of feelings that can’t be optimized or explained away. Machines may perfect the script, but the ache for authentic touch will always linger in the pause after the message ends. You can settle for comfort—or reach for the heartbreak and wonder of the real thing.