Somewhere in a windowless lab, a row of neural processors hums beneath flickering fluorescent light. Technicians peer through tempered glass at screens alive with swirling data: voice clips, browsing habits, sentences cut off mid-thought. A lonely office worker pauses at his desk as his email draft “predicts” the next line. He hesitates over the suggestion: exactly what he meant to say, or perhaps something altogether different.

In apartments around the city, people speak in fragments, trusting their devices to finish sentences and anticipate cravings. Coffee makers start brewing before alarms ring, music playlists shuffle in time with heartbeats. An algorithm tries to guess sadness from a sigh, joy from a double tap, outrage from an ellipsis. Intent becomes the new gold—mined, packaged, resold to marketers, managers, and lovers alike.

A mother texts her son, the keyboard correcting warmth into efficiency. Somewhere, a virtual assistant recommends a crisis hotline, misreading exhaustion as despair. On the other side of the glass, the real conversation is not with another human, but with the invisible machinery interpreting every pause, every glance, every silence. The world, so eager to anticipate, teeters on the edge of misunderstanding.

Quick Notes

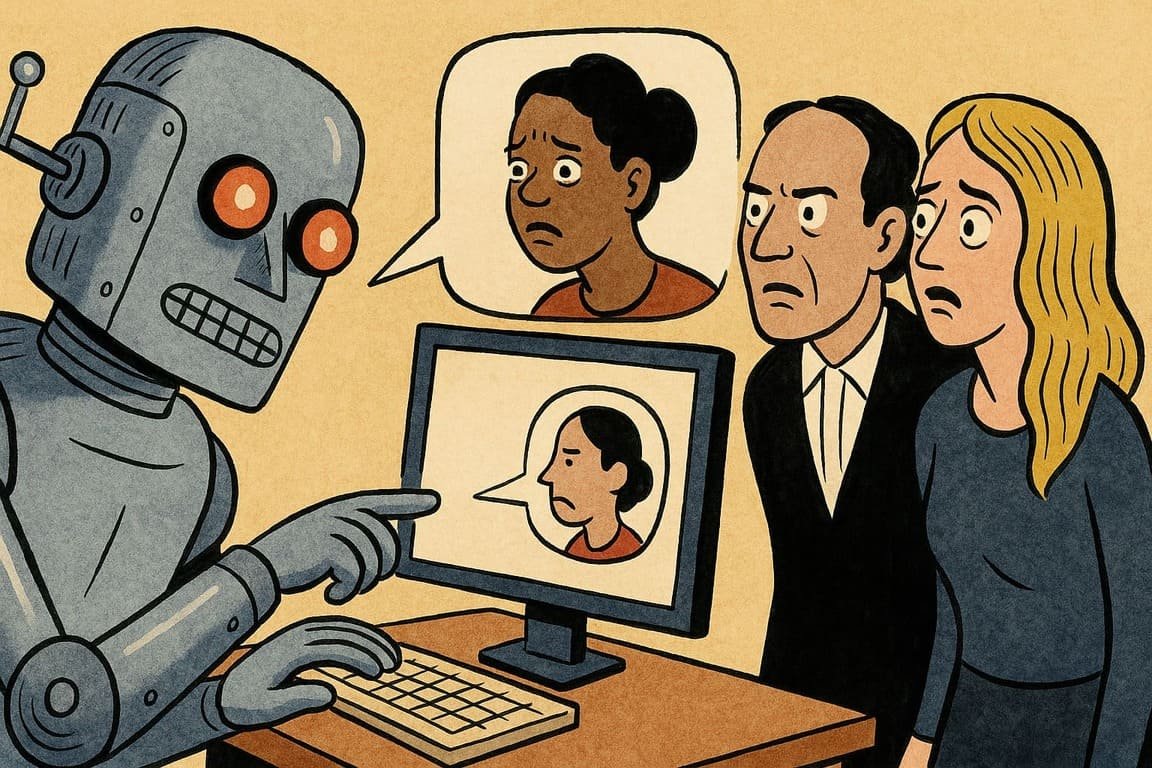

- AI Reads Minds, Badly: Machine learning systems now guess your intent from words, gestures, and context. Sometimes this helps, but often they fumble, overreaching and distorting meaning.

- Assumptions Shape Reality: AI’s guesses drive everything from emails to emergency responses. Wrong guesses change outcomes, shaping relationships, work, and even justice.

- Biases Are Baked In: Machine interpreters carry their creators’ blind spots. Marginalized voices are misunderstood or erased, and “neutral” algorithms quietly reinforce old prejudices.

- Resistance Rises: Workers, consumers, and even the engineers who build these systems fight back, demanding context, nuance, and control. Case studies of rebellion reveal the limits of prediction.

- True Understanding Is Human: Only by insisting on context, empathy, and slow attention can society reclaim agency from the machine’s hasty guesses.

The Allure and Absurdity of Prediction

You live in an age obsessed with anticipation. Every platform, from your inbox to your smart fridge, promises to guess your needs before you can speak them. It’s magic when it works—a calendar that schedules meetings you almost forgot, an app that suggests pizza on rainy nights. But the cost of convenience is misinterpretation. One misplaced word in a chat and your algorithmic assistant schedules a “performance review” instead of a pizza party.

Consider Janelle, a lawyer who discovered her email client auto-completing apologies she never intended. The result: a week of confused clients and an awkward call from her boss. “My own words became a stranger to me,” she laughs now, “all because my software got creative.” The promise of seamlessness quickly turns into farce.

Prediction runs deeper than suggestions. Streaming services now nudge your mood, predicting not just what you want to watch but what you should feel. One study found people skipping songs on Spotify when the algorithm’s “chill” playlist felt more like a lecture. The music, meant to soothe, became a source of unease.

In retail, chatbots try to interpret intent from your clicks and hesitations. Sometimes, they get it right—a coupon for your favorite coffee. Other times, you’re offered sympathy for a “breakup” after buying tissues and ice cream, even if you just have allergies. The uncanny valley is now an everyday experience.

The appeal of machine guessing is obvious: a world that understands you without explanation. The danger is just as clear—being misread by a system that never truly listens.

When Guesses Drive Consequences

Every mistaken guess becomes a new reality. AI-powered hiring tools scan resumes, “interpreting” ambition or reliability from word choice and formatting. The result: candidates screened out by invisible hunches. At a midsize tech firm, an HR algorithm flagged Maria as “disengaged” because she rarely used exclamation marks in her emails. The review almost cost her a promotion, until a manager dug deeper and found that Maria’s team loved her steady calm.

Police departments experiment with AI tools to predict crime, scanning social media for “intent to organize.” Civil rights groups warn that these guesses echo old patterns, criminalizing entire neighborhoods based on algorithmic vibes. Wrong predictions turn into real consequences—extra patrols, denied loans, lost jobs.

In healthcare, chatbots and symptom checkers are trained to read between the lines of patient complaints. A tired mother complains of headaches; the app suggests anxiety, missing her underlying hypertension. When insurance companies buy this data, people find themselves paying more for misunderstood risks.

Everywhere, the stakes rise as guesses drive action. Sometimes, it’s just an annoying suggestion—other times, it’s a life-altering misread. The machine never hesitates, never apologizes, never really learns.

When algorithms guess wrong, they rarely take the blame. Humans clean up the mess, often without knowing what went wrong.

Bias in the Machine’s Eye

No AI is neutral. Machine interpreters are built on human data, and with it, human prejudice. Language models trip over accents, dialects, and cultural idioms, reducing diverse voices to error messages or silence. A Nigerian journalist, Chukwu, found his essays repeatedly flagged as “unclear” by a writing assistant that had never been trained on African English. His boss, trusting the AI’s feedback, nearly pulled his column from the paper.

These biases aren’t accidental—they’re systematic. Tech teams, often lacking diversity, teach systems to recognize what they already know. Predictive text, facial recognition, and emotion-reading algorithms work best for those who look and speak like the designers. For everyone else, intent is misread, dismissed, or rewritten.

Women find themselves labeled “emotional” by AI analysis tools, while men get tagged as “assertive.” Job seekers with “ethnic-sounding” names receive fewer callbacks from automated screening bots. Students who code-switch or use non-standard grammar find their essays flagged for “correction” by machine graders.

In criminal justice, predictive policing algorithms reinforce old injustices. Cities already burdened by surveillance see resources diverted to neighborhoods “flagged” by flawed data. A wrongful arrest in Baltimore made headlines when a system misread a Black teenager’s tweet as a threat. The boy’s family fought for months to clear his record.

Bias isn’t a glitch—it’s a feature. Until these systems are challenged, machine interpreters will keep guessing wrong, amplifying the harms they were meant to erase.

Human Rebellion and Machine Humility

The backlash against machine guessing is growing. Employees demand transparency—why did the system decide this, and who holds the power to overrule? Tech companies, under fire, launch “explainable AI” projects, promising tools that reveal how predictions are made. In practice, most explanations are riddles, not answers.

Stories of resistance spread. At a fintech startup, workers staged a “prediction strike,” refusing to act on any machine-generated suggestion for a week. Productivity fell, but morale soared. The company learned what its AI could not: human judgment matters. A teacher in Madrid encouraged students to “beat the bot” by intentionally writing essays the grading system would misunderstand, sparking debate about the purpose of education.

Consumers, too, fight back. Privacy activists teach workshops on “confusing the algorithm”—changing search habits, using slang, and randomizing online activity. Artists publish “algorithmic poetry,” making a game of outsmarting recommendation engines.

Even some engineers are joining the movement. A Google developer leaked a memo admitting, “We’re just guessing—sometimes at random. The best thing we can do is slow down and ask why.”

Machine humility is rare, but essential. When systems admit their limits, humans can step in, restore context, and fix mistakes. Rebellion isn’t about breaking tech—it’s about reclaiming the right to be misunderstood, to change, to surprise.

The Slow Art of Real Understanding

Real understanding can’t be rushed. You know this when a friend reads your mood from a pause, a parent hears meaning in silence, a mentor spots potential that a resume never shows. Machines, hungry for certainty, lose the nuance, the context, the slow dance of human intention.

Organizations that value real insight slow down, ask more questions, and trust the unpredictable. A hospital in Melbourne replaced its symptom checker with human triage nurses after too many near-misses. Patient trust returned, and so did better outcomes. A family-owned grocer in Chicago, tired of loyalty app misfires, returned to handwritten notes for special orders—the surprise and delight brought customers back.

True understanding starts with humility. The best leaders listen longer, judge less, and leave room for ambiguity. Tech can support, but never replace, the patient work of human connection.

The future belongs to those who value depth over speed, questions over guesses, context over certainty. When the machines get it wrong, it’s up to people to get it right.

Ghost in the Loop: Who Gets to Decide?

Beneath the flicker of city lights, a man deletes his predictive keyboard app, feeling both lighter and more alone. In offices, families, and friendships, the struggle to be seen as you are—not as the machine thinks you should be—begins again each morning. The room feels bigger, the silence less intimidating, when you choose to finish your own sentence. If you want to be truly understood, you must refuse to let a machine have the last word.