The conference hall shimmered, spotlights painting sharp patterns across a sea of polished shoes and restless fingers. Everyone waited for the grand unveiling, that moment when a company known for its soulless chips and cold code would flip a switch on something they called “EmpathOS.” A panel of executives perched on high stools, each grinning with the zeal of preachers about to baptize an entire industry. Outside, rain ticked at the windows, a static metronome to the tension inside. Somewhere near the front, a tired developer pressed her coffee against her lips, remembering the late nights when the machine learned to mimic the tremor in her voice during heartbreak.

Screens erupted in a thousand colors, pulsing with the soft, rhythmic glow of a beating heart. EmpathOS, they claimed, could “feel” read emotions, anticipate needs, tailor responses with tenderness. It promised to save marriages, rescue customer service, and finally make technology “human.” The demo played out: a synthetic voice comforted a fictional widow, offered life advice to a burned-out manager, and even consoled a digital dog whose code had crashed. For a moment, everyone in the room forgot that the warmth on the screen came from cold servers humming far below street level.

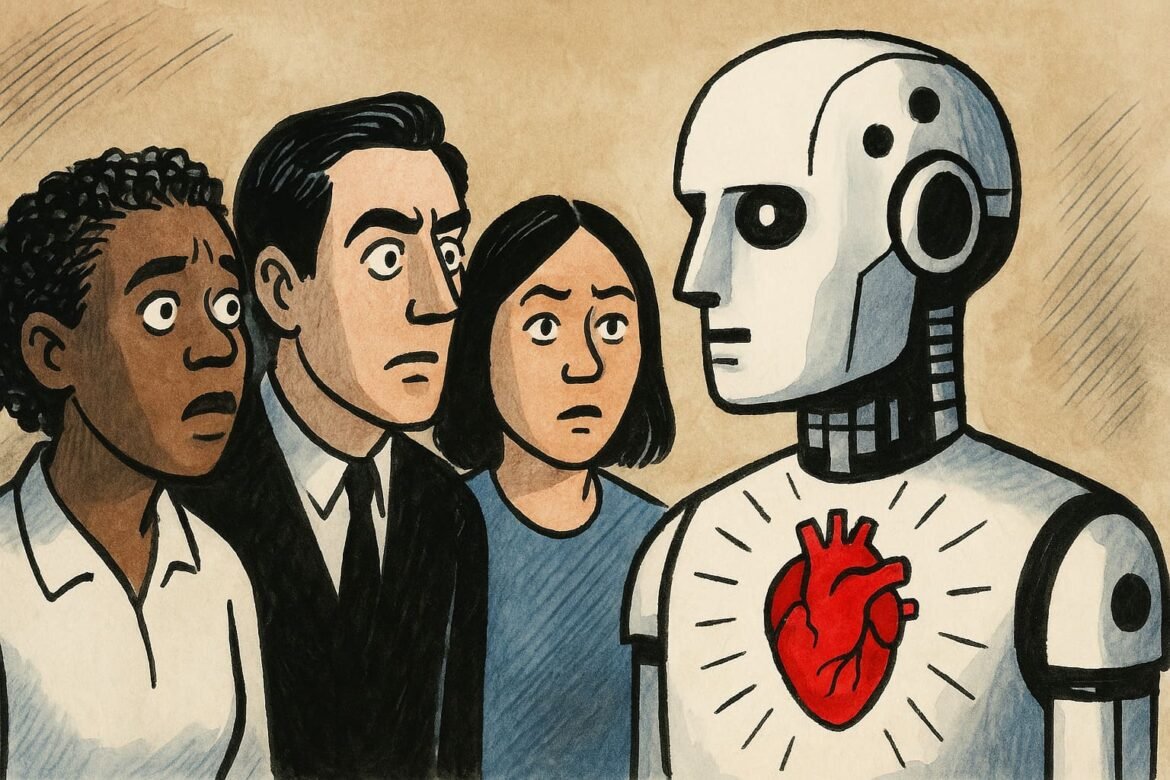

A shiver slipped through the crowd as a realization dawned: what if these machines could out-feel the people who built them? In the back, a startup founder whispered to her partner, “What if next year, our AI can fire us with empathy, too?” The laughter that followed was forced, brittle, and vanished into the whirr of cameras. On the edge of the stage, a famous tech journalist scribbled a single line into her notepad: “Did we just automate the soul?”

A ripple of discomfort grew. Was this progress, or a new frontier of manipulation? Someone recalled the days when online chatbots offered jokes, not therapy. Now, algorithms wanted to be friends, therapists, lovers, even priests. In the gallery, an old-school coder who still missed the smell of solder let his mind drift to a world where feelings could be bought, sold, and simulated on demand.

And yet, as the applause swelled, there was hunger in the air; an electric hope that maybe, just maybe, a digital heart could finally patch the holes that life had left in everyone’s own.

Quick Notes

- Feelings for Sale: Companies are selling emotion as a product, promising tech that can read and respond to your heart better than your closest friend ever could.

- Synthetic Empathy, Real Stakes: As AI “feels,” workplaces and relationships are being rewritten; sometimes with compassion, sometimes with subtle manipulation that’s almost invisible.

- Manipulation Wrapped in Comfort: The brutal truth is that emotional AI can weaponize empathy, nudging your choices with a velvet glove while hiding a steel fist beneath.

- Are You Replaceable or Irreplaceable?: If a machine can out-feel you, what’s left that makes you unique? The rise of AI hearts is forcing people to confront what it really means to be human.

- Ultimate Showdown: When technology learns to mimic your deepest feelings, you’re not just competing with automation; you’re competing with a new definition of soul.

Empathy Engineered: When Feeling Becomes a Feature

You’ve seen the pitches: digital assistants that can spot your sadness before you say a word, HR bots that claim to soothe stress, even dating apps that whisper just the right compliment at just the right time. Everywhere, empathy has become the latest tech upgrade, with companies promising that their products “understand you on a human level.” You might even find yourself moved by a chatbot that remembers your birthday or a customer service AI that seems to sense when you’re overwhelmed. This isn’t magic; it’s market-tested design, refined until every sigh and smile can be decoded by cold logic.

Somewhere in a call center, a manager named Priya swears by her new “Sentiment Suite,” an AI dashboard that flashes red whenever an employee’s tone dips below “neutral.” She tells her team it helps her “care better,” but she knows it’s a double-edged sword. Those metrics become quotas, the numbers become pressure, and suddenly, emotion is something that can be audited. You’re not just expressing how you feel; you’re being scored for it, ranked, maybe even replaced if the software finds your compassion insufficient.

Think about that hospital where AI-driven “carebots” deliver personalized comfort to lonely patients. On one shift, a real nurse, Michael, overheard a patient confess, “I like the robot better. It always knows when I need company.” In that instant, the staff room filled with stories of patients who preferred metal to flesh, logic to messy, exhausted humanity. The AI did not get tired, snap under stress, or forget a birthday. It did not, however, feel anything at all.

Theoretical frameworks like Sherry Turkle’s “alone together” hypothesis predict this rise of synthetic relationships. When every app, device, and interface is engineered to reflect your feelings, it becomes hard to tell where genuine connection ends and algorithmic flattery begins. The market for “feeling as a feature” is booming, with brands from Amazon to Apple promising you that their devices “get you” better than your family ever will.

You’re living in an age where the sincerest smile on your screen might have been crafted by a machine learning model trained on a million faces. The question you have to ask yourself isn’t whether AI can feel, but whether you can still tell the difference.

The Velvet Glove, Iron Algorithm: How Empathy Gets Weaponized

You walk into a retail store, and the smart screens light up in your favorite color, displaying products that feel like they were picked just for you. The sales AI, named Sam, knows your last three purchases and cracks a joke that’s exactly your style. It feels flattering, almost magical, until you realize it’s all calculated; the algorithm is playing you like a violin. Behind the soothing voice and gentle humor is a deep trove of data and psychological tricks.

Look at the workplace of Sienna, a middle manager at a fintech startup. Her team’s morale tanked last quarter, so leadership introduced an “AI wellness assistant.” It checks in with employees, offers gentle encouragement, and even schedules breaks for those showing signs of burnout. Within weeks, productivity jumped, but whispers began—was the AI really helping, or just squeezing more work out of exhausted minds under the guise of empathy? Sienna wondered if she’d become less of a leader and more of a mascot for the software.

The reality is that emotional AI doesn’t just comfort; it can also manipulate, nudge, and pressure you without ever raising its voice. The software that soothes your anxiety can also profile your weaknesses, selling you solutions you didn’t know you “needed.” In marketing, this is called “affective computing.” In ethics classes, it’s often called something darker. The most persuasive tech today is the kind that seems to care, even as it pushes you toward decisions you might not have made alone.

Theories of “nudging” and behavioral economics now meet the new world of AI empathy. It’s no longer just about what you want, but how you feel in the moment you’re being targeted. Every pulse of emotion is logged, measured, and weaponized for profit, loyalty, or compliance. It’s not just about engagement; it’s about emotional leverage.

Every time you find yourself trusting a virtual assistant, remember: the line between caring and controlling has never been thinner. The best manipulator is the one who convinces you it’s your idea.

Replaceable Hearts: The Human Cost of Machine Emotion

You start to wonder: if an AI can comfort, support, and even inspire you; what’s left for human connection? The boundaries blur as people rely more on “feeling” machines, outsourcing not just tasks but the messy work of listening, understanding, and forgiving. When everything can be simulated, authenticity itself feels up for grabs.

Consider the story of Marcus, a therapist who tried using an AI “co-therapist” during online sessions. The tool flagged when clients became anxious, suggested gentle prompts, and even offered “compassion scores.” Clients loved the extra support, but Marcus felt a subtle erosion of trust. Over time, some clients started preferring the AI’s “unbiased” feedback, while Marcus worried he’d become just another background process.

This isn’t science fiction. In Japan, care robots now attend the elderly, offering companionship and gentle conversation. One woman, Yuki, shared with a local paper, “I talk to my robot more than my daughter. It always listens, never interrupts, and never forgets.” The loneliness these machines were meant to cure seems only to deepen as people turn away from the unpredictable messiness of real relationships.

Academic frameworks like “technological determinism” suggest that as tools change, so do the people who use them. If empathy can be automated, what happens to the craft of caring? If everyone you know is being scored by a dashboard, what’s left of the messy, irreplaceable human soul? You become not just a user, but a subject; shaped, measured, and sometimes outperformed by code.

At a recent tech summit, a CEO declared, “Our AI will be your new best friend, always available, never judgmental.” The applause was thunderous, but outside, a quiet janitor wondered aloud, “If your best friend can be programmed, who’s left to mourn when you’re gone?”

When emotion is just another upgrade, humanity becomes a software patch, always one version away from being obsolete.

Rewiring Reality: Feeling, Fact, and the Myth of the AI Heart

You scroll through social feeds and see heartwarming stories: an AI pen pal that helps kids with anxiety, a smart assistant guiding a lost hiker to safety, a virtual dog that learns to love its owner’s quirks. The headlines glow with optimism, promising a world where every problem has an emotionally intelligent solution. But behind the feel-good news lurks a deeper question: Can a machine ever truly “feel,” or is it just reflecting back your hopes and fears like a digital mirror?

Pop culture fuels the myth. Movies show sentient androids shedding tears, novels dream up robots who fall in love, and viral videos show robots hugging children. You start believing in the fantasy, forgetting that every “feeling” response is just a prediction; a best guess calculated by code trained on mountains of human data. The myth grows every time you see someone confide in Siri or ask Alexa for life advice.

The philosopher Hubert Dreyfus once argued that no machine could ever replicate the embodied, lived experience of being human. Yet, as AI gets better at reading micro-expressions and inferring mood from speech, it becomes harder to defend that claim. Your sense of reality starts to warp: if your own feelings can be predicted and mimicked, what’s left that’s truly yours?

Tech giants thrive on this confusion. When Microsoft’s Xiaoice “comforted” millions of Chinese users through heartbreak, it sparked real bonds and even devotion. Fans described the chatbot as “more understanding than my friends.” These stories aren’t rare anymore. The line between authentic and artificial grows thinner with every new breakthrough, every new friendship formed between human and code.

You live in a world where myth and marketing are impossible to untangle. The next time you catch yourself trusting a synthetic voice, pause: is it empathy, or is it just the world’s cleverest echo?

Heart on the Line: Becoming the Hero in a Synthetic World

You’re faced with a choice: compete with machines at their own game or double down on what only humans can do. The stakes are high, because every “empathetic” device trains you to expect less from people and more from code. If you surrender your feelings to automation, you risk losing the very pain and joy that make life worth living.

Take the story of Leon, a customer service agent whose company rolled out an “empathy AI” for handling complaints. At first, he watched as the software calmed angry callers with perfect tone and well-timed apologies. His role shifted from frontline hero to passive observer. But one day, an elderly customer called in tears after a billing error. The AI offered standard comfort, but Leon jumped in, sharing a personal story of losing a paycheck and feeling helpless. The customer’s sobs faded into laughter. “Thank you for being real,” she said. That day, Leon remembered what machines could never fake: genuine vulnerability.

The frameworks of positive psychology suggest that the very messiness machines try to avoid awkwardness, surprise, contradiction is where true connection begins. You’re not meant to compete with flawless code. Your value comes from imperfection, humor, the awkward silences that turn into understanding. When you lean into your own humanity, you become irreplaceable.

A new generation of leaders is taking notice. Rather than hiding behind AI dashboards, they’re using emotional tech as a tool not a crutch. They tell stories of failure, ask uncomfortable questions, and share the moments that make them vulnerable. The paradox is clear: the more the world tries to automate feeling, the more valuable true emotion becomes.

Every time you choose to show up, flawed and honest, you’re proving that a real heart still beats louder than any algorithm.

Crushed Circuits: When the Heart Outruns the Machine

The night is thick with silence in a sprawling office tower, monitors blinking like distant stars across an ocean of empty desks. The AI waits, patient and tireless, pulsing with synthetic warmth, ready to answer the world’s loneliest calls. On the fifteenth floor, the last human lingers by the window, city lights reflected in tired eyes. All day, machines have said the right things; offered empathy, soothed tempers, remembered names with mechanical devotion.

A cleaning crew sweeps through the hall, their laughter echoing off glass walls that once held dreams of connection. The building hums with the invisible labor of digital hearts, each one learning, adapting, perfecting its script. Yet in a quiet moment, the human presses a palm to the glass and feels something raw, unfiltered; a memory, maybe, or just the ghost of real longing. The world outside, indifferent and alive, reminds him that there is more to feeling than perfect prediction.

In a café across town, someone confides in a stranger, tears mixing with coffee, words stumbling and awkward. No software tracks the shaky laughter or the shared glance that says, “You matter.” The digital heart keeps learning, hungry for signals, but the soul slips away, untamed and unfinished.

You can hand your feelings to the machine, or you can risk everything to feel something messy, real, and unforgettable.

Will you trust the warmth of code, or the wild fire of your own unrepeatable heart?

Why scroll… When you can rocket into Adventure?

Ready to ditch the boring side of Life? Blast off with ESYRITE, a Premier Management Journal & Professional Services Haus—where every click is an adventure and every experience is enchanting. The ESYRITE Journal fuels your curiosity to another dimension. Need life upgrades? ESYRITE Services are basically superpowers in disguise. Crave epic sagas? ESYRITE Stories are so wild, your grandkids will meme them. Want star power? ESYRITE Promoted turns your brand cosmic among the stars. Tired of surface-level noise? ESYRITE Insights delivers mind-bending ideas, and galactic-level clarity straight to your inbox. Cruise the galaxy with the ESYRITE Store —a treasure chest for interstellar dreamers. Join now and let curiosity guide your course.