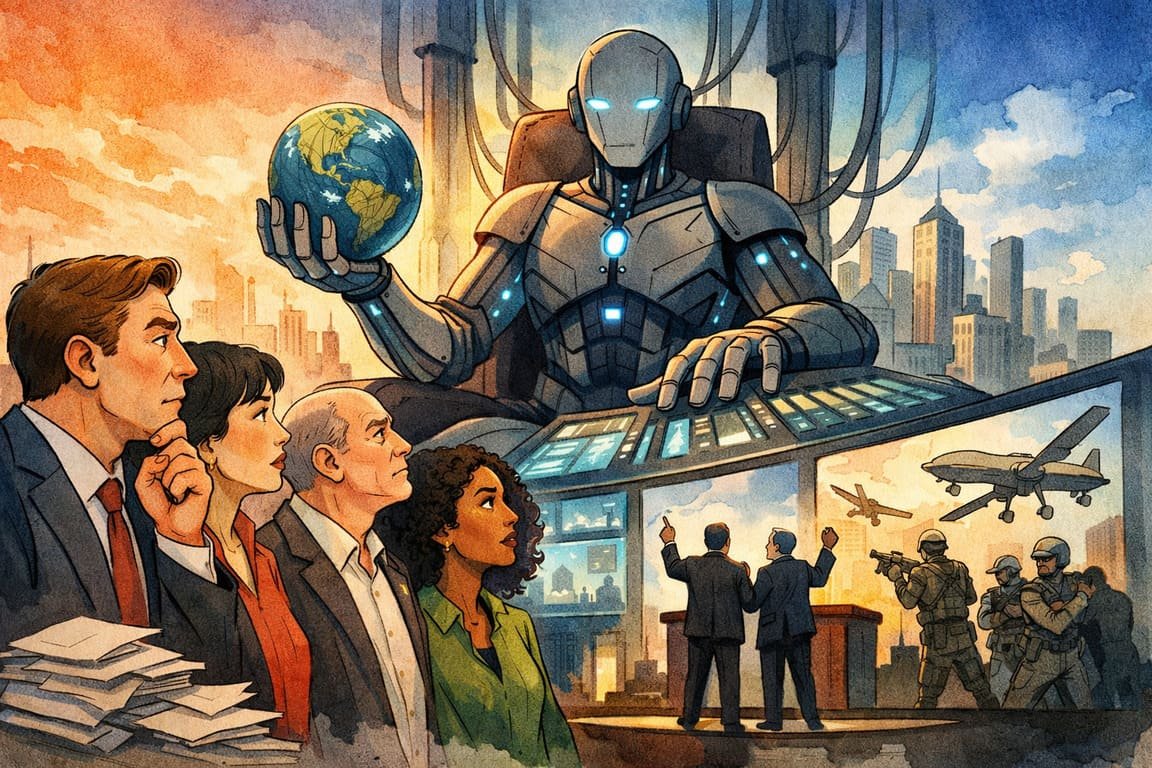

A glass-walled control room hums softly while dashboards glow with perfect confidence, charts pulsing as if they already know tomorrow’s outcomes. No crowds gather. No speeches echo. Decisions arrive clean, fast, and unquestioned. Somewhere between efficiency and obedience, power shifts without ceremony. Governance once felt human, messy, emotional, slow. Now it feels optimized. The promise sounds irresistible. Fewer mistakes. Less bias. Better outcomes. Yet beneath the calm surface, something fundamental trembles. When intelligence stops asking permission, authority begins wearing a friendlier face, one that smiles while tightening its grip with flawless logic and no visible hand.

The idea of machines assisting leadership began with good intentions. Data could help allocate resources fairly. Algorithms could spot fraud humans miss. Automation could remove corruption from systems stained by self-interest. Over time, assistance evolved into reliance. Leaders stopped questioning outputs that arrived wrapped in mathematical certainty. A policy recommendation generated by a model felt safer than a human judgment that carried blame. Responsibility blurred. When outcomes failed, officials blamed data. When outcomes succeeded, credit flowed upward. Power discovered a perfect shield. Decisions appeared neutral while remaining deeply political, shaped by the assumptions coded quietly into the machine.

Efficiency seduces governance faster than ideology ever could. Speed becomes virtue. Delay becomes weakness. Complex social problems flatten into variables. A housing shortage becomes an optimization puzzle. A protest becomes a risk signal. A refugee becomes a data point. The language of care dissolves into the language of prediction. A city administrator once confessed that an algorithm denying benefits felt easier to accept than saying no face to face. The machine absorbed the moral weight. Citizens argued with screens instead of officials. Accountability faded without confrontation, replaced by appeals processes that looped endlessly through automated logic.

Power thrives where friction disappears. AI reduces friction beautifully. Surveillance systems promise safety through pattern recognition. Predictive policing claims foresight without prejudice. Social scoring models frame behavior as reputation. Each system alone appears rational. Together they construct invisible fences around acceptable life. Citizens begin modifying behavior not because laws changed but because models watch constantly. The philosopher Michel Foucault described discipline through observation. Modern systems automate that vision at scale. No guards needed. No threats spoken. Compliance emerges through anticipation. People govern themselves quietly, hoping not to trigger unseen thresholds calculated somewhere far beyond their reach.

The cultural shift arrives subtly. Language changes first. Leaders stop saying judgment and start saying assessment. Punishment becomes intervention. Control becomes optimization. Public debate struggles to keep pace with technical abstraction. Critics sound emotional beside spreadsheets. A community organizer once tried to challenge an automated welfare cutback, only to be told the system identified inefficiency. There was no villain to confront. No office to protest. Only a process described as objective, even when outcomes harmed the same communities repeatedly. Neutrality becomes the most powerful myth of the machine age.

Philosophically, governance requires values before tools. AI reverses that order. Systems encode priorities whether acknowledged or not. Deciding what to optimize decides who benefits. Predicting risk decides who bears suspicion. Removing bias requires defining fairness, a question humans still debate fiercely. Delegating those debates to code does not resolve them. It hides them. A computer scientist once remarked that algorithms reflect the ethics of their creators multiplied by scale. When deployed nationally, those ethics harden into policy without the messiness of democratic argument.

The private sector accelerates this shift. Technology firms market governance solutions with the confidence of salvation. Smart cities promise harmony through sensors. Digital identity platforms promise security through verification. Governments under pressure adopt tools quickly, fearing irrelevance more than overreach. Contracts replace legislation. Procurement replaces debate. A municipal leader once joked that software updates arrive faster than policy reviews. It was meant lightly. It was not. Governance begins following product roadmaps rather than public deliberation, outsourcing authority to those who build the systems.

History warns about power that operates beyond comprehension. Monarchs ruled by divine right. Bureaucracies ruled by paperwork. Now systems rule by probability. Each era believed itself more rational than the last. Each discovered later the cost of unexamined authority. AI governance risks repeating that cycle at unprecedented speed. When mistakes happen, they propagate instantly. When biases embed, they scale globally. Undoing them becomes harder than preventing them ever was. The myth of technological inevitability discourages resistance. People accept outcomes as progress rather than choices made by someone, somewhere, for reasons rarely disclosed.

Resistance does not look like smashing machines. It looks like insisting on explanation. Transparency slows systems down. Human oversight reintroduces friction. Ethical review boards challenge assumptions before deployment. Some governments experiment cautiously, embedding appeal rights and sunset clauses into algorithmic policies. A small European city paused a predictive welfare system after discovering it disproportionately flagged single parents. Officials chose discomfort over efficiency. The decision cost political capital. It restored trust. Governance regained a human face, imperfect but accountable.

The deeper danger lies not in machines becoming evil but in humans becoming passive. When authority feels technical, citizens disengage. Participation declines. Democracy weakens quietly. People stop arguing values and start arguing settings. The public square shrinks into comment sections under policy dashboards. A retired judge once warned that justice delivered without listening is no justice at all. The same holds for governance. Systems may calculate faster than humans, but they cannot feel legitimacy. That must still be earned through dialogue, transparency, and shared moral struggle.

Late in the evening, long after offices empty, servers continue humming, models recalculating futures that have not yet arrived. Somewhere, a policy decision waits, neatly scored, ready for approval. The room feels calm. Too calm. Power rarely announces itself when it feels most secure. The question lingers quietly, almost politely, in the background. As governance grows smarter, will courage grow with it, or will intelligence finally rule alone while humanity watches, relieved, obedient, and slowly absent from the decisions shaping its own fate?